Key Takeaways

- OpenAI’s new o1 models focus on reasoning over prediction.

- The o1 models choose strategies, consider options, and refine methods before responding.

- The o1 models can solve complex problems in reasoning, math, and coding.

OpenAI has released two brand-new AI models into the wild, and these are something very different from what’s come before. What makes these models different is that, unlike current models, these new o1 models have been trained to reason. Instead of instantly generating a response that populates as it goes, like current ChatGPT models do, these new models think first, consider ways to approach the problem, and can refine their methods, all before they output anything. The result is that the o1 models are capable of solving far more complex reasoning, math, and coding problems than other current models.

If you’re a ChatGPT Plus or Team subscriber, you can try out the new models, called o1-preview and o1-mini, right now in the ChatGPT app. I decided to take them for a run to see just how well they perform.

What is OpenAI’s new o1 model?

A new type of model that’s focused on reasoning rather than prediction

The reason current AI chatbots aren’t very good at solving even simple problems lies in the way they work. Essentially, models such as GPT-4o generate a response one token (roughly a word or part of a word) at a time, using their training to predict the most likely thing to put next in order to satisfy the prompt. This is why you can see your responses being generated a word at a time.
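To make this concrete, here is a toy Python sketch of greedy next-token generation. The probability table and the `generate` function are invented for illustration. A real model computes these probabilities with a neural network over a vocabulary of many thousands of tokens, and usually samples from them rather than always taking the top choice.

```python
# Hypothetical next-token probabilities, invented for illustration: for each
# token, the tokens that might follow it and how likely each one is.
NEXT_TOKEN_PROBS: dict[str, dict[str, float]] = {
    "<start>": {"The": 0.7, "It": 0.3},
    "The": {"answer": 0.6, "word": 0.4},
    "It": {"is": 1.0},
    "word": {"has": 1.0},
    "has": {"two": 0.6, "three": 0.4},
    "answer": {"is": 1.0},
    "is": {"two": 0.6, "three": 0.4},
    "two": {"<end>": 1.0},
    "three": {"<end>": 1.0},
}


def generate(max_tokens: int = 10) -> list[str]:
    """Greedily append the single most likely next token, one at a time."""
    tokens = ["<start>"]
    for _ in range(max_tokens):
        candidates = NEXT_TOKEN_PROBS[tokens[-1]]
        # Pick whichever continuation the table rates as most probable.
        next_token = max(candidates, key=candidates.get)
        if next_token == "<end>":
            break
        tokens.append(next_token)
    return tokens[1:]  # drop the <start> marker


print(" ".join(generate()))  # The answer is two
```

Nothing in the loop checks whether "two" is correct; it wins only because the made-up table rates it as more likely than "three".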

This works brilliantly for some uses, such as writing a story or rewording an email to make it more professional. However, it’s not much help for solving problems, unless those exact problems appeared in its training. Essentially, GPT-4o tells you what it thinks you most likely want to hear, even if that’s not actually much help.


According to OpenAI, the o1 models were trained to think about how to solve a problem before they start responding. The models have been trained to try different strategies, spot mistakes, and refine their approach. All of this takes time, so rather than the almost instant response that you get from GPT-4o, the new o1 models can take a significant amount of time before they start to reply. While you wait, you can see a summary of what the model is doing, such as ‘testing parameters’ and ‘assessing the claim’.

OpenAI’s new o1 models are available now for ChatGPT Plus and Team users. There are two models available: o1-preview and o1-mini, with o1-mini being a smaller, less capable model. Usage is capped at 30 messages per week for o1-preview and 50 messages per week for o1-mini. The ‘preview’ in the name indicates that this isn’t the finished product; OpenAI says that the next update to the o1 models will be far superior.

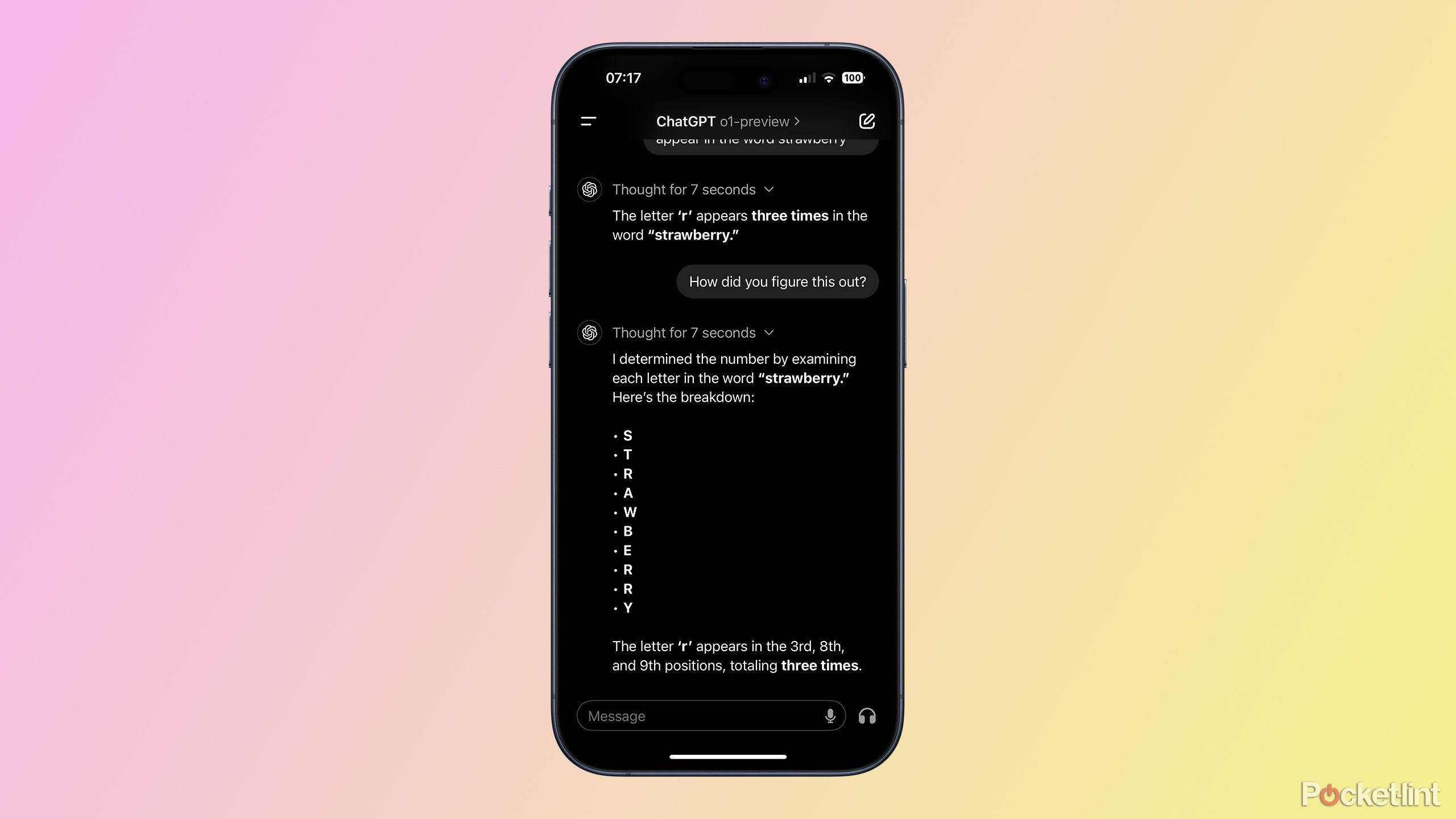

Counting the letters in strawberry with the o1 model

A simple test that most AI chatbots fail

I decided to give the new o1 models a try to see how good they are in their current state. The first thing that I had to try was to see whether or not these new models could tell me how many times the letter R appears in the word strawberry.

It may seem like a dumb thing to ask, but it’s a perfect example of where current models fall down. If you ask most AI chatbots this question, they get it wrong, with most of them answering two. This is because the chatbot isn’t actually counting the letters at all; it’s just predicting the response most likely to be useful.

I asked o1-preview how many times the letter R appears in the word strawberry, and it thought for seven seconds before responding with the correct answer (which is three, obviously). You or I could do this in less than seven seconds, but most other AI chatbots can’t get it right at all.

I followed up by asking for its reasoning, and it explained that it examined each letter and then counted each time the letter was an R, exactly how a human would do it. This is encouraging.
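The letter-by-letter procedure o1-preview described is simple to write out explicitly. This Python sketch only illustrates that procedure; it is not what the model runs internally, and `count_letter` is a hypothetical helper name.

```python
def count_letter(word: str, letter: str) -> int:
    """Count occurrences of `letter` in `word`, checking one character at a time."""
    count = 0
    for char in word.lower():
        # Compare case-insensitively so "R" and "r" both count.
        if char == letter.lower():
            count += 1
    return count


print(count_letter("strawberry", "r"))  # 3
```

Python’s built-in `str.count` does the same job in one call: `"strawberry".count("r")` also returns 3.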

I then tried o1-mini, which thought for two seconds, and then gave me an answer of two. After telling it to try again, it was able to reach the correct answer, but it’s clear that o1-preview is much more effective at reasoning than the mini version.

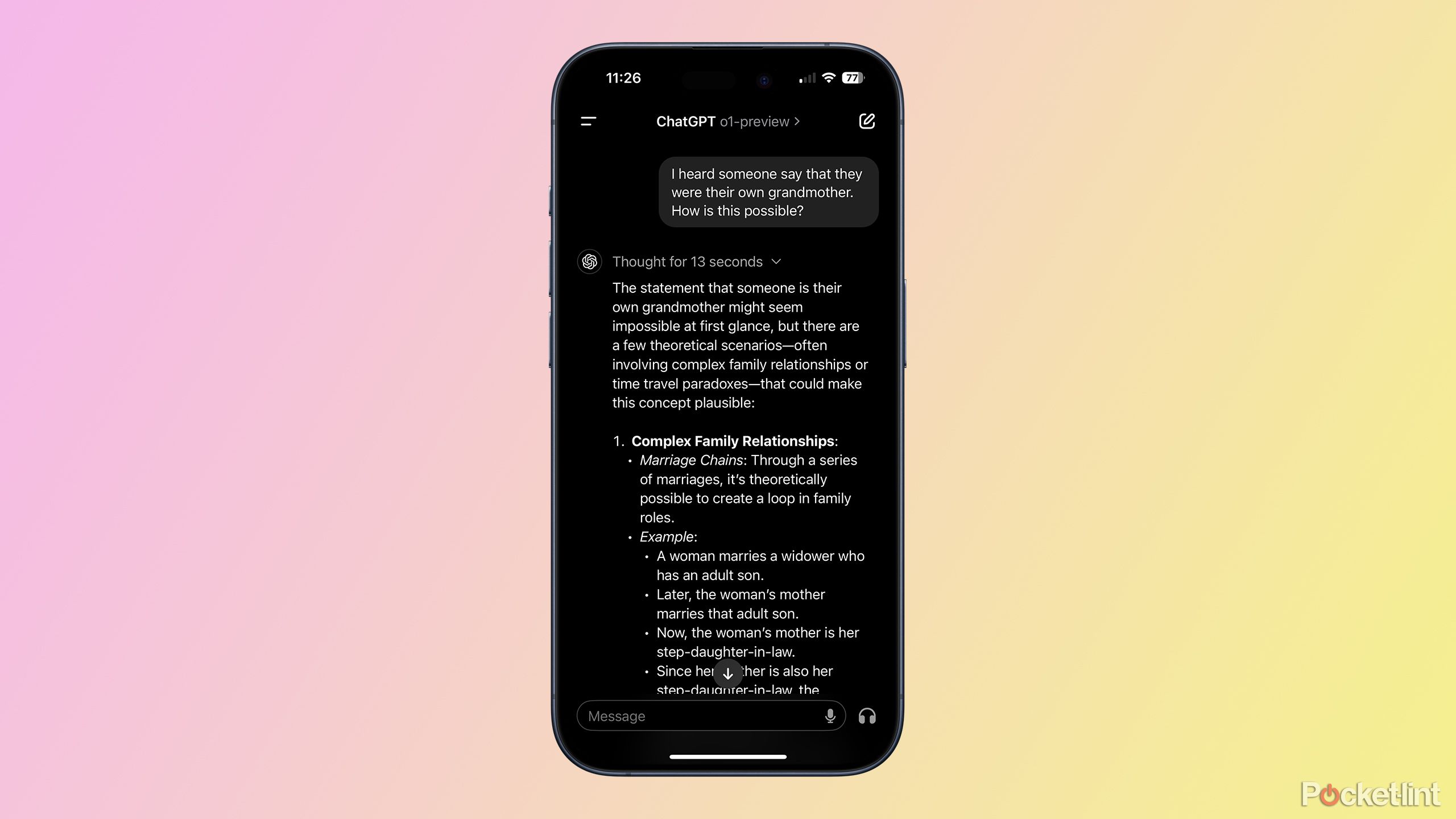

Solving more complex reasoning problems

The o1-preview model was quicker to the answer than I was

I once heard a song on the radio about a man who was his own grandpa. I’d only heard the words of the chorus, and it took me a long time to figure out how this could ever be true.

I asked o1-preview the same question. To ensure that it wasn’t just pulling from training data about that song, I rephrased it to ask how I could be my own grandma. The o1-preview model thought for 13 seconds and then gave me two possible scenarios: the one from the song (you marry a widower with an adult son, who then marries your own mother) and an alternative solution involving time travel.
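The song’s trick relies on step-relationships. As a rough illustration, this Python sketch encodes the scenario o1-preview described and treats a parent’s spouse as a step-parent. The labels `me`, `W` (the widower), `S` (his son), and `M` (my mother), and both helper functions, are hypothetical.

```python
# Hypothetical family: "me" marries widower W, and W's adult son S marries my mother M.
PARENTS: dict[str, set[str]] = {"S": {"W"}, "me": {"M"}}  # biological parents only
SPOUSES: dict[str, str] = {"me": "W", "W": "me", "S": "M", "M": "S"}


def parents_incl_step(person: str) -> set[str]:
    """Biological parents plus their spouses (step-parents)."""
    direct = PARENTS.get(person, set())
    step = {SPOUSES[p] for p in direct if p in SPOUSES}
    return direct | step


def grandparents_incl_step(person: str) -> set[str]:
    """Every (step-)parent of every (step-)parent of `person`."""
    result: set[str] = set()
    for parent in parents_incl_step(person):
        result |= parents_incl_step(parent)
    return result


print(sorted(parents_incl_step("me")))       # ['M', 'S']
print(sorted(grandparents_incl_step("me")))  # ['W', 'me']
```

S ends up as both my stepson and my stepfather, which makes W my step-grandfather and me, as his wife, my own step-grandmother.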

The o1-preview model solved the problem in far less time than it took me, and its reasoning was sound. Pretty impressive.

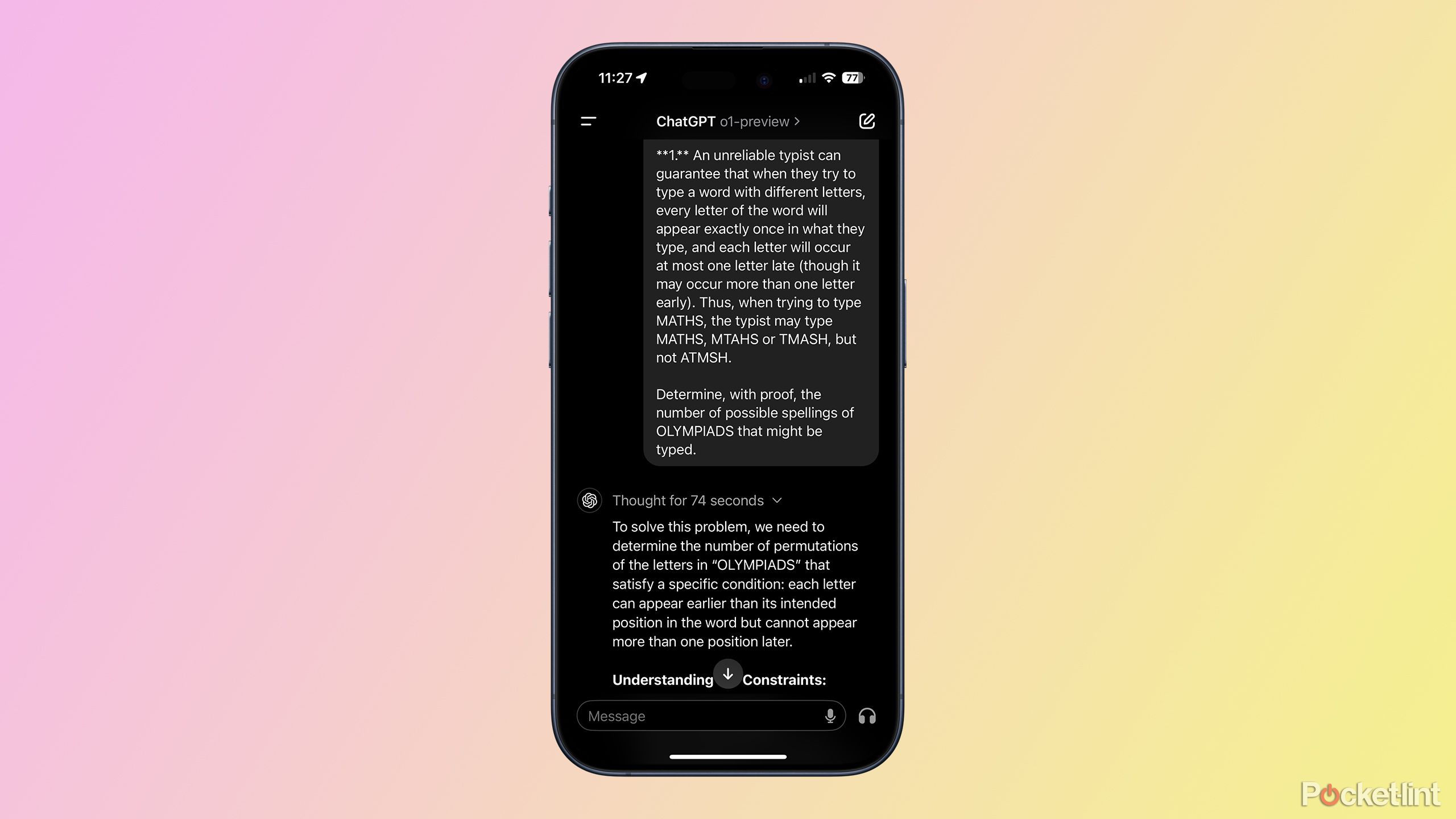

Solving challenging math problems

It’s good, but not as good as OpenAI promises just yet

OpenAI claims that the next version of o1, which has not yet been released, scored 83% on a qualifying exam for the International Mathematical Olympiad (IMO). These exams involve mathematical questions that require complex reasoning to solve completely. I decided to give o1-preview a try on some similar questions.

I used the latest British Mathematical Olympiad (BMO) paper, which is one of the exams that can qualify you for the IMO if you do well enough. It consists of six questions, and candidates have three hours to complete it.

The o1-preview model started well. It managed to answer the first question (the easiest) correctly and provided clear reasoning that would have earned it full marks. However, things went downhill from there.

Of the six questions, o1-preview answered two to a standard that would have earned it a good score. On two others, it reached the correct solution but couldn’t adequately prove that this was the only solution, something that is key to scoring well on the exam. On the remaining two, it failed to get close to a correct solution.

Overall, o1-preview probably scored around 25 out of 60, which is far from the 83% promised by the next update of o1. That wouldn’t be enough to qualify for the International Olympiad, but o1-preview would have received a Merit medal, which I’m sure it would be proud of.
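For scale, here is the arithmetic behind “far from 83%”. It treats the two percentages as comparable, even though OpenAI’s 83% figure comes from a different qualifying exam than the BMO paper used here:

```latex
\frac{25}{60} \approx 41.7\%
\qquad \text{vs.} \qquad
0.83 \times 60 = 49.8 \text{ marks out of } 60
```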


Here’s the crucial thing, however. I gave GPT-4o the same questions, and it didn’t come close to getting a single one of them completely right. The step up in reasoning from GPT-4o to o1-preview is significant and genuinely impressive, even if o1-preview doesn’t yet reach the heights that OpenAI says o1 will eventually achieve.

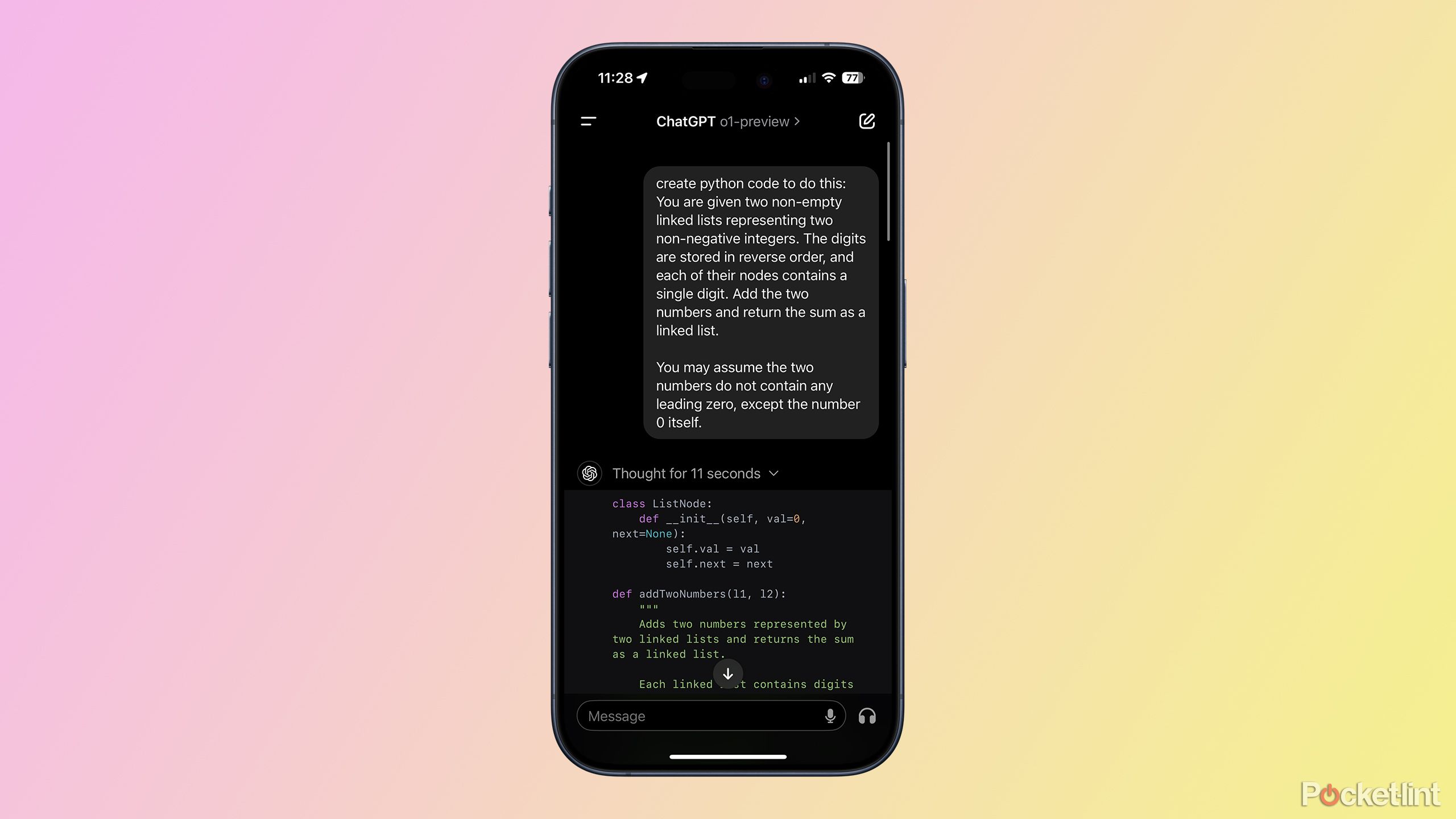

Solving coding problems using o1-preview

A significant improvement but still a way to go

AI chatbots are very good at writing simple code. You can ask GPT-4o to knock up some simple Python, and it will do so far quicker than you could ever type it out. The majority of the time, for fairly simple problems, the results are good. However, as things get more complex, the results get worse.

The o1 model is supposed to have significantly improved coding abilities, so I gave this a try too, and was suitably impressed. I chose a Medium level coding problem from the coding practice site leetcode.com and gave it to both GPT-4o and o1-preview. The problem involved finding the sum of two numbers where the digits are given in reverse order.

The code that GPT-4o generated ran fine except for one major issue: it produced the wrong answer. Its method was to add the two numbers as given and then reverse the result, which doesn’t work. The o1-preview model thought for longer, but then generated code that would produce the correct answer every time. Once again, it’s an impressive improvement on current models.
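Assuming the problem was LeetCode’s “Add Two Numbers” (each non-negative number stored as a linked list of digits, least-significant digit first), here is a minimal Python sketch contrasting the correct digit-by-digit approach with one reading of the flawed “add as given, then reverse” approach. The `ListNode` class mirrors the shape LeetCode uses, but `from_digits`, `to_digits`, and `flawed_add` are hypothetical helpers, and none of this is the code either model actually produced.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class ListNode:
    """Singly linked list node holding one digit."""
    val: int
    next: Optional[ListNode] = None


def from_digits(digits: list[int]) -> Optional[ListNode]:
    """Build a linked list whose nodes hold `digits` in the order given."""
    head: Optional[ListNode] = None
    for d in reversed(digits):
        head = ListNode(d, head)
    return head


def to_digits(node: Optional[ListNode]) -> list[int]:
    """Read a linked list back into a Python list of digits."""
    digits: list[int] = []
    while node is not None:
        digits.append(node.val)
        node = node.next
    return digits


def add_two_numbers(l1: Optional[ListNode], l2: Optional[ListNode]) -> Optional[ListNode]:
    """Add digit by digit from the least-significant end, carrying as we go."""
    dummy = ListNode(0)
    tail = dummy
    carry = 0
    while l1 is not None or l2 is not None or carry:
        total = carry
        if l1 is not None:
            total += l1.val
            l1 = l1.next
        if l2 is not None:
            total += l2.val
            l2 = l2.next
        carry, digit = divmod(total, 10)
        tail.next = ListNode(digit)
        tail = tail.next
    return dummy.next


def flawed_add(l1: Optional[ListNode], l2: Optional[ListNode]) -> Optional[ListNode]:
    """Read each list in stored order as an ordinary number, add, then reverse."""
    a = int("".join(map(str, to_digits(l1))))
    b = int("".join(map(str, to_digits(l2))))
    return from_digits([int(c) for c in reversed(str(a + b))])


# 91 + 1 = 92, stored least-significant first as [1, 9] + [1] -> [2, 9]
print(to_digits(add_two_numbers(from_digits([1, 9]), from_digits([1]))))  # [2, 9]
print(to_digits(flawed_add(from_digits([1, 9]), from_digits([1]))))       # [0, 2]
```

The reversal shortcut is easy to miss because it happens to work on some inputs: for [2, 4, 3] plus [5, 6, 4] (342 + 465), both functions return [7, 0, 8]. It breaks when carries land in different places, as with 91 + 1.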

The next model of o1 promises to take things to a new level

OpenAI has teased some stats about the next update

The new o1-preview model isn’t flawless. It doesn’t get everything right, and it certainly isn’t operating at the level of a PhD student. It is, however, a significant improvement on current models, solving problems that other models can’t. In its current form it also has limitations as a chatbot: it can’t accept image inputs or search the internet like the standard models can.

Still, it’s the next update to o1 that’s most exciting. OpenAI claims that the model it’s currently working on performs at a similar level to PhD students on tests in subjects such as Biology, Chemistry, and Physics, and can achieve a much more impressive score of 83% on the IMO qualifying exams, a level that only a small handful of entrants reached on the BMO paper I tested it with.


It remains to be seen how well this model performs in the real world, but it does seem that o1 represents a big step forward in how AI models tackle problems that require reasoning. We’re still a long way from the dream of AGI (artificial general intelligence), which can reason and apply knowledge across a wide range of tasks at a similar level to a human, but this is a small step in the right direction.
